I've been really interested in Jake Archibald's fastest F1 website series lately. I've enjoyed watching him dissect and test out improvements for speeding up their sites, and it inspired me to do something similar with a page that I'd noticed could be improved: Tesla's Cybertruck landing page.

I'd noticed a few things that might be slowing it down, and I wanted to dig in to see if I could speed it up. Hopefully you'll also find a few things here that are worth checking on any site you work on.

Reducing the CSS size

There are 3 CSS files requested in the `<head>`, one of which is 745kb (2.7MB uncompressed), another that's 93kb (281kb uncompressed) and a much smaller one at 1.2kb (only 1.6kb uncompressed).

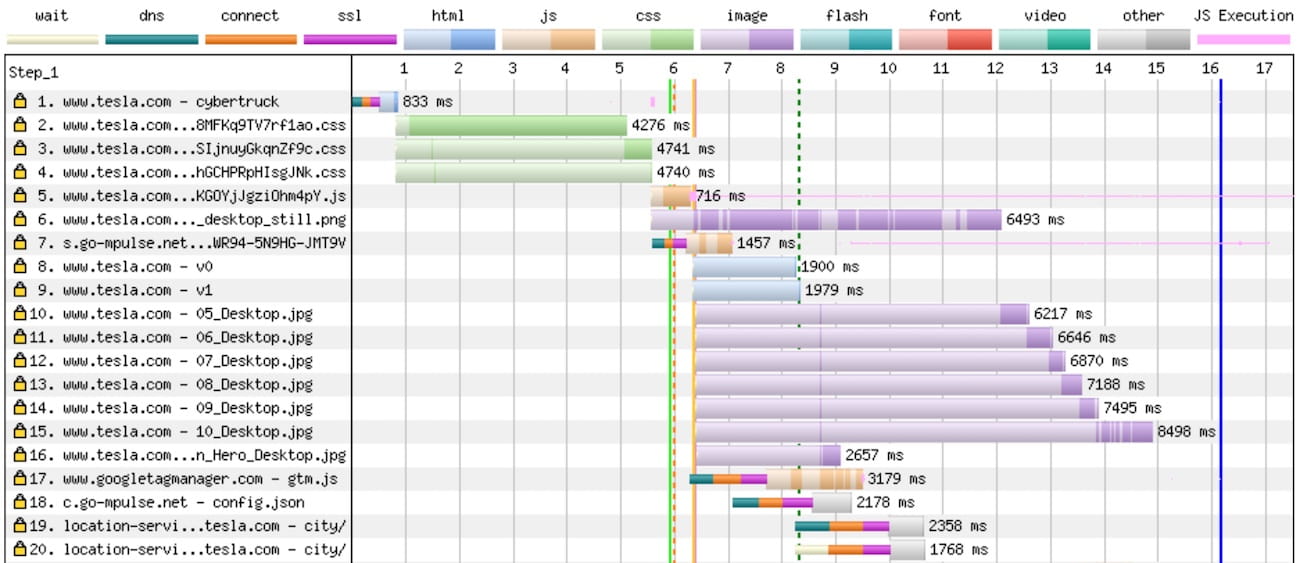

You can see them as requests 2, 3 and 4 in the waterfall chart above - and the first one in particular is a big concern.

Because all three are stylesheets in the `<head>`, the browser won't render anything until it has downloaded them, and 745kb is a hefty amount to download if you want a fast page. On a fast 3G connection, that file alone takes around 4 seconds to download.
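As a rough sanity check on that 4 second figure, here's a back-of-the-envelope estimate. It assumes a downlink of about 1.6 Mbps and a 150 ms round trip (close to WebPageTest's 3G Fast preset, but check the current settings), treats 1kb as 1,000 bytes, and ignores connection setup and competing requests, so real timings will be a bit worse.

```javascript
// Back-of-the-envelope transfer time for a single render-blocking file.
// Assumptions (not measurements): 1 kb = 1,000 bytes, ~1.6 Mbps downlink,
// ~150 ms round trip. Ignores DNS/TLS setup, TCP slow start and other
// requests sharing the connection, so real timings will be somewhat worse.
function estimateDownloadSeconds(sizeKb, downlinkMbps = 1.6, rttMs = 150) {
  const bits = sizeKb * 1000 * 8;                // file size in bits
  const onTheWire = bits / (downlinkMbps * 1e6); // seconds spent transferring
  return onTheWire + rttMs / 1000;               // plus one round trip for the request
}

console.log(estimateDownloadSeconds(745).toFixed(1)); // 3.9 (the big stylesheet)
console.log(estimateDownloadSeconds(93).toFixed(1));  // 0.6 (the 93kb stylesheet)
```

Even this optimistic estimate puts the big stylesheet at close to 4 seconds before anything can render.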

It's such a big file because a load of fonts and images have been base64-encoded and embedded in it.
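To see how much of a stylesheet is base64, a quick scan for data URIs helps. This is a hypothetical helper, not something from the Cybertruck build: it's a regex rather than a CSS parser, and it assumes the data URIs sit inside `url(...)` with a `;base64,` marker.

```javascript
// Hypothetical helper: measure how much of a stylesheet is inline base64 data.
// Assumes data URIs appear inside url(...) and use the ";base64," form.
// This is a regex scan, not a full CSS parser.
function summariseDataUris(cssText) {
  const pattern = /url\(\s*['"]?data:([^;,]+)[^,]*;base64,([A-Za-z0-9+/=]+)/g;
  const byType = {}; // base64 characters per MIME type, e.g. "font/woff2"
  let totalChars = 0;
  for (const match of cssText.matchAll(pattern)) {
    const mimeType = match[1];
    const chars = match[2].length;
    byType[mimeType] = (byType[mimeType] || 0) + chars;
    totalChars += chars;
  }
  const share = cssText.length ? totalChars / cssText.length : 0;
  return { totalChars, share, byType };
}
```

Pointing it at the big stylesheet, e.g. `summariseDataUris(require("fs").readFileSync("styles.css", "utf8"))` with whatever filename you saved it under, shows both the share of the file that's base64 and whether fonts or images are responsible.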

There are two big drawbacks to adding base64-encoded fonts or images to your CSS. Firstly, base64 makes the data about a third bigger and doesn't compress very well, so your gzip- or Brotli-compressed CSS is sent over the network at a higher file size than it needs to be.

Secondly, those fonts and images are now on your critical path: they need to be downloaded before any content can be rendered, even if they aren't used on that page.
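The first drawback is easy to check with Node's built-in zlib module. This sketch uses random bytes as a stand-in for an already-compressed font or image (an assumption: real WOFF2 or JPEG data has similarly little redundancy left). Base64 inflates the data by a third, and compressing it mostly just claws that overhead back; the compressed base64 can't get below the size of the binary it encodes, which the browser could have fetched separately without blocking render.

```javascript
const crypto = require("crypto");
const zlib = require("zlib");

// Random bytes stand in for an already-compressed font or image:
// like real WOFF2 or JPEG data, there's little redundancy left to remove.
const binary = crypto.randomBytes(100_000);
const base64 = Buffer.from(binary.toString("base64"));

const sizes = {
  binary: binary.length,                                // served as its own file
  base64: base64.length,                                // about 4/3 of the binary size
  base64Gzip: zlib.gzipSync(base64).length,             // inside a gzipped stylesheet
  base64Brotli: zlib.brotliCompressSync(base64).length, // inside a Brotli stylesheet
};
console.log(sizes);
```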

You can find much more detail on why base64 in your CSS is generally a bad idea in this post by Harry Roberts.

It would take hours for me to reverse-engineer the CSS to remove all of the fonts and images and put them into their own separate files, and - I won't lie - I'm not really willing to do that. But what I can do instead is see if I can lazy load any CSS that isn't critical.

Lazy loading non-critical CSS

I split out the critical CSS (the styles needed to render the above-the-fold elements) into critical.css, which I loaded normally.
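The splitting itself was manual trial and error, but the idea can be sketched in code. This hypothetical helper assumes the rules are already parsed into simple `{ selector, declarations }` objects with no `@media` nesting, and that you've hand-picked the above-the-fold selectors; dedicated critical-CSS tools instead work out which rules apply to the visible part of a rendered page.

```javascript
// Hypothetical sketch: split parsed rules into critical and deferred buckets.
// Assumes flat { selector, declarations } rules (no @media nesting) and a
// hand-picked list of above-the-fold selectors that must match exactly.
function splitCriticalCss(rules, aboveFoldSelectors) {
  const wanted = new Set(aboveFoldSelectors);
  const critical = [];
  const deferred = [];
  for (const rule of rules) {
    // ".hero, .nav" is critical if any selector in the list matches.
    // Naive: splitting on commas breaks selectors like :is(.a, .b).
    const parts = rule.selector.split(",").map((s) => s.trim());
    (parts.some((p) => wanted.has(p)) ? critical : deferred).push(rule);
  }
  const toCss = (list) => list.map((r) => `${r.selector}{${r.declarations}}`).join("\n");
  return { critical: toCss(critical), lazyloaded: toCss(deferred) };
}
```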

The remaining CSS I put into a lazyloaded.css file, and then used Scott Jehl's method for lazy loading CSS.

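It works by setting the media attribute to "print", which tells the browser the stylesheet only applies when the user prints the page. The browser still downloads it, but at a low priority and without blocking rendering. Once it has loaded, the onload handler switches it to media="all" so the styles apply on screen too.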

```html
<link rel="stylesheet" href="style.css" media="print" onload="this.media='all'">
```

It's also worth noting that my attempt here is pretty clumsy. The Cybertruck page has a ton of CSS, and it involved a lot of trial and error to figure out what is critical and what isn't - and I'm fairly certain that there's still a huge amount of non-critical styling that wound up in critical.css - but it's a start.
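If you're generating the markup from a build script, a small helper keeps the two stylesheets consistent. This is my own sketch rather than anything from the Cybertruck page: the helper names are hypothetical, and the `<noscript>` fallback is an addition, since without JavaScript the onload swap never runs and the stylesheet would only ever apply to print.

```javascript
// Hypothetical helpers for emitting the critical and lazy-loaded stylesheets.
// Escapes characters that would break out of a double-quoted attribute.
function escapeAttr(value) {
  return String(value).replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

// Render-blocking stylesheet for the above-the-fold styles.
function criticalStylesheet(href) {
  return `<link rel="stylesheet" href="${escapeAttr(href)}">`;
}

// Print-media trick: downloads without blocking render, then applies on load.
// The <noscript> copy covers visitors with JavaScript disabled.
function lazyStylesheet(href) {
  const url = escapeAttr(href);
  return [
    `<link rel="stylesheet" href="${url}" media="print" onload="this.media='all'">`,
    `<noscript><link rel="stylesheet" href="${url}"></noscript>`,
  ].join("\n");
}

console.log(criticalStylesheet("critical.css"));
console.log(lazyStylesheet("lazyloaded.css"));
```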

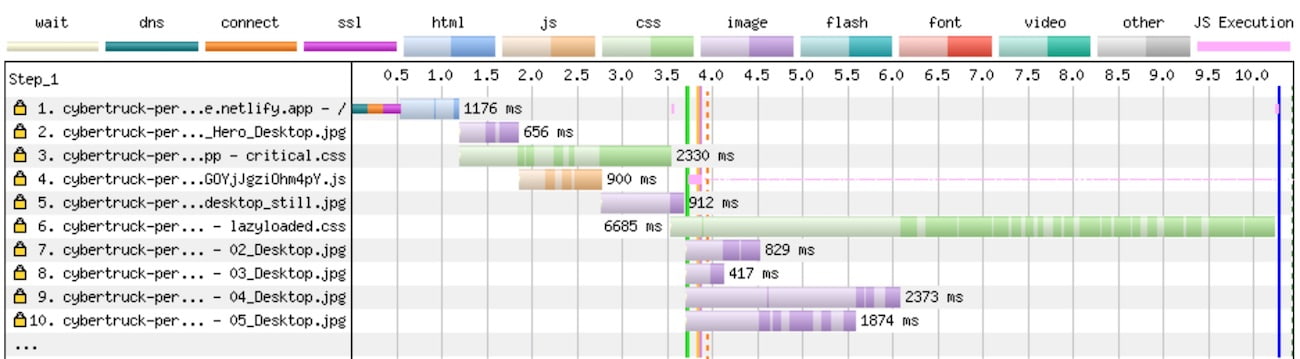

The critical CSS (request 3) now finishes several seconds earlier - because the bulk of that file has been pushed into the lazyloaded CSS on request 6, which no longer blocks rendering. It's still a massive file because the fonts and lots of images are included in it, but at least that CSS is no longer holding up the page from rendering.

Removing the anti-flicker snippet

The first thing in the `<head>` is the Google Optimize anti-flicker snippet. It's a small amount of JavaScript (paired with a CSS rule that sets the page's opacity to 0) that hides the page, leaving a blank white screen, until either the Optimize script has loaded or 4 seconds have passed, whichever comes first.
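To make the trade-off concrete, here's a simplified model of that behaviour. It's not Google's snippet: the function name and the injectable `schedule` parameter are hypothetical (the latter just makes the timeout testable), and it assumes a CSS rule such as `.async-hide { opacity: 0 }` exists elsewhere. The page is hidden immediately and only revealed when the testing script calls `reveal()` or the timeout fires, so if that script is slow or fails to load, visitors look at a blank page for the full 4 seconds.

```javascript
// Simplified model of an anti-flicker snippet (not Google's actual code).
// Assumes CSS elsewhere hides anything with the given class (opacity: 0).
function installAntiFlicker(root, { className = "async-hide", timeoutMs = 4000, schedule = setTimeout } = {}) {
  let hidden = true;
  root.className = `${root.className} ${className}`.trim(); // hide the page straight away

  const reveal = () => {
    if (!hidden) return; // only reveal once, whichever trigger comes first
    hidden = false;
    root.className = root.className
      .split(/\s+/)
      .filter((c) => c && c !== className)
      .join(" ");
  };

  schedule(reveal, timeoutMs); // fallback: stop waiting after the timeout
  return reveal;               // the A/B testing script calls this when ready
}
```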

Snippets like this are often recommended by client-side A/B testing tools to stop a visitor seeing the control variant (a headline, the text on a button, etc.) and then watching it switch to the test variant. But when it comes to web performance, it's a bit of an anti-pattern, because you're usually showing the user a blank page very early in the page load, which isn't a great experience.

Andy Davies has written about the impact of anti-flicker snippets in much more detail than I can here, but my advice would be to remove that snippet.

Reducing the video thumbnail image size

There's an autoplaying video below the fold that lazy loads, which is good: it doesn't download the video early in the page load, and instead waits until it's just about to scroll into view.

But it uses an image placeholder (the poster attribute on the `<video>` tag), and that placeholder is an 811kb PNG that does load early.

Converting that image placeholder to a JPG and running it through Squoosh dropped that file size to a much more reasonable 33kb. You could go further and convert it to WebP too - but I chose not to, because I think 33kb is decent enough to see a performance improvement.

There are also (as you'd expect) quite a few other images, some of them fairly large, often between 200kb and 400kb. I used Squoosh to reduce these too, but kept the quality fairly high (around 85%) because they're promotional images and I wanted them to stay sharp. That still got most of them below 100kb each.
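Squoosh did the actual compression, but finding which images are worth the effort is easy to script. This hypothetical Node helper lists image files in a folder that are over a size budget (100kb here, to match the target above, treating 1kb as 1,000 bytes), largest first.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical audit helper: list image files in a folder that are over a
// size budget, largest first. Sizes use 1 kb = 1,000 bytes.
function imagesOverBudget(dir, budgetKb = 100, extensions = [".png", ".jpg", ".jpeg", ".webp"]) {
  return fs
    .readdirSync(dir)
    .filter((name) => extensions.includes(path.extname(name).toLowerCase()))
    .map((name) => ({ name, kb: fs.statSync(path.join(dir, name)).size / 1000 }))
    .filter((file) => file.kb > budgetKb)
    .sort((a, b) => b.kb - a.kb);
}
```

Running `imagesOverBudget("./images")` (or wherever your assets live) gives a to-do list to work through in Squoosh.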

Using native lazy loading for images

Images were loaded using Lozad.js, but Lozad itself was included in a late-loading JavaScript file. This meant that all the images loaded fairly late, even the ones above the fold, like the hero image.

The lozad implementation that the Cybertruck page uses also seems to default to loading all the images in on page load, albeit late. I wanted to see whether switching to native browser lazy loading would improve things here, so I used the loading="lazy" attribute for all the below-the-fold images instead of lozad.
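Switching from Lozad to native lazy loading mostly means moving each URL out of Lozad's `data-src` attribute into a plain `src`, and adding `loading="lazy"` to the images below the fold. Here's a sketch of generating that markup, assuming you know roughly how many images sit above the fold; `eagerCount` is a hypothetical parameter, and `src`/`alt` are assumed to be trusted, already-escaped strings.

```javascript
// Sketch: emit <img> tags that rely on native lazy loading instead of a JS library.
// The first `eagerCount` images (assumed to be above the fold) load normally,
// so the browser can discover them straight from the HTML; the rest are lazy.
function renderImages(images, eagerCount = 1) {
  return images
    .map(({ src, alt }, index) => {
      const loading = index < eagerCount ? "eager" : "lazy";
      return `<img src="${src}" alt="${alt}" loading="${loading}">`;
    })
    .join("\n");
}
```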

This worked really well for stopping images far below the fold from loading early and stealing bandwidth from more important resources.

Preloading the hero image

I normally try to avoid preloading more than I need to, but there were two reasons the hero image was loading late. First, the lazy loading library meant the browser couldn't discover it straight away. Even after I switched it to load eagerly, it was still competing for bandwidth with the lower-priority lazyloaded.css, so I opted to use a preload to make sure the hero image was downloaded early.

To do that, I added this preload tag:

```html
<link rel="preload" as="image" href="01_Main_Hero_Desktop.jpg">
```

If you implement this yourself and have different desktop and mobile images, you can preload responsive images too, which is something the Cybertruck landing page would also benefit from.
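For the responsive case, one approach is a preload per image variant, each with a media attribute so the browser only fetches the one matching the viewport. In this sketch the mobile file name (`01_Main_Hero_Mobile.jpg`) and the 768px breakpoint are hypothetical, and the media queries need to match the ones on the page's `<picture>` sources, or the browser will preload one image and then display another.

```javascript
// Sketch: one preload per art-directed hero image, each gated by a media
// query so only the variant matching the viewport is fetched.
function heroPreloads(variants) {
  return variants
    .map(({ href, media }) => `<link rel="preload" as="image" href="${href}" media="${media}">`)
    .join("\n");
}

// Hypothetical mobile file name and breakpoint; keep the queries non-overlapping.
console.log(
  heroPreloads([
    { href: "01_Main_Hero_Mobile.jpg", media: "(max-width: 767px)" },
    { href: "01_Main_Hero_Desktop.jpg", media: "(min-width: 768px)" },
  ])
);
```

If the variants only differ in resolution rather than crop, the imagesrcset and imagesizes attributes on the preload link are the alternative, mirroring an image's srcset and sizes.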

I'm always cautious with preloading images, as a preloaded image competes with other resources early on, but I think it worked well in this case, where the image would otherwise have been discovered very late.

What's the result?

I put a copy of the original Cybertruck landing page, with no changes made, on Netlify as a test page, and compared it to a version with the performance improvements added.

Testing both with WebPageTest on a fast 3G connection showed a pretty noticeable improvement. The improved version is more than twice as fast, and it's visually complete before the original version even starts rendering.